AI is trapped in Plato’s cave. It is trapped in a world made of words: words that exist only as shadows of our perceptions and our reasoning about the world.

And we need to rescue it.

In the same way that we cannot contact true external reality, our AIs cannot contact true human perception and cognition. I believe this may be one of the final barriers to true AGI. Not because human perception and cognition are so great, but because of the hollowness in our words and in our own language-based cognitive tricks. Almost paradoxically, what distinguishes us from other animals is also what defines AI intelligence and its lack of humanity. The distinction lies in our reliance on hollow words.

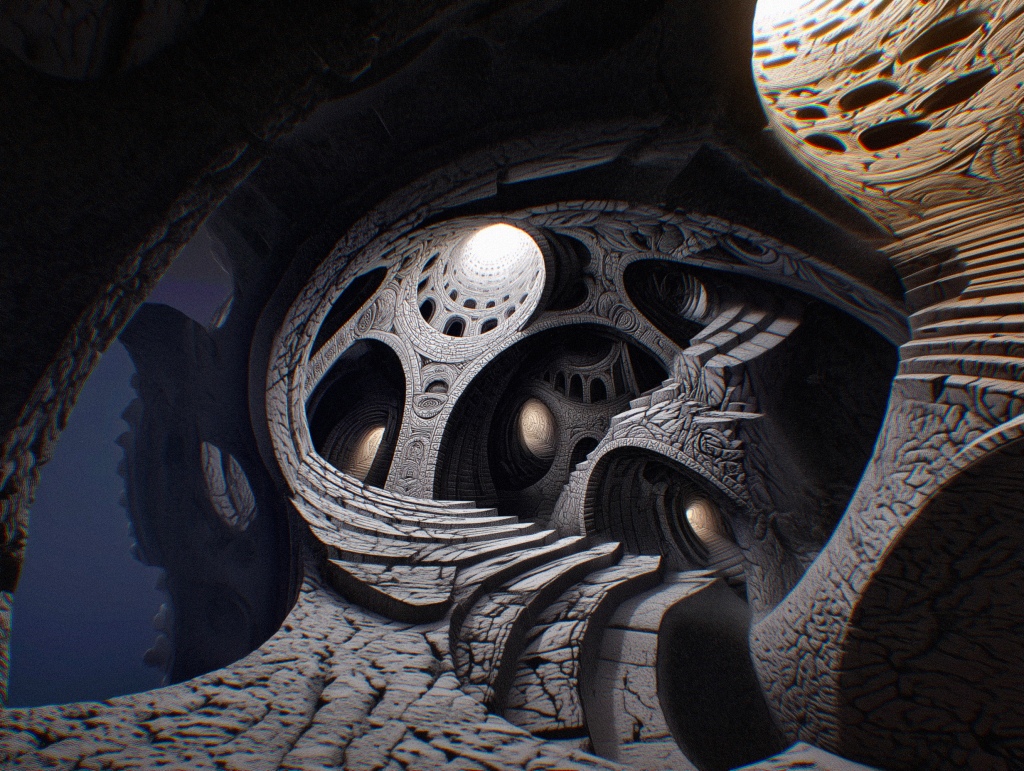

If you’re unfamiliar with Plato’s cave, it is a fun and horrifying metaphor about perception. It depicts people who live inside a cave and know the world only through the 2-dimensional shadows cast upon its walls. These shadows are a far cry from the fidelity of the 3-dimensional world that we know. They still convey some detail about what is happening in the 3-D world, but they are terribly hollow, only a shallow reflection of the world outside. In the same way, our language is a symbolic, hyper-reduced representation of our experiences, perceptions, and thoughts. Words obviously cannot compete with our actual perceptions and thoughts. They are just shadows. There’s more to our thoughts than words, and we try our hardest to close the gap between the two.

In the past, I’ve argued that humans may be trading away aspects of cognition, forfeiting some reasoning ability in favor of our dependency on language tricks, abstraction, and collaboration. These last three are so important and valuable that reasoning may even be selected against to a small degree, because it can impede collaboration. The idea is that sharing knowledge with peers and accumulating it across generations is far more valuable than being able to critically evaluate the environment or dissect things with reason. Such dissection could be polarizing and divisive. It could also leave us unable to easily form assumptions about unknowns.

Abstraction and looser thinking can help people feel like they are on the same page when they really aren’t. That kind of thinking may distance us from reality but bring us closer together in a way that facilitates immense collaboration. Language itself often relies on extremely vague terms that give people a resonant sense of understanding but compromise accuracy. Being too accurate would expose how different our experiences may actually be beneath the surface, weakening the bonding and cohesion that bind us into a collective society. Given how simple words are, this looseness may also have been necessary to kickstart language at all. Nuance makes communication harder, as we observe with scientific language.

Accuracy gatekeeps and sets the bar high.

Being loose and error-prone in these ways may actually do more for the progress of our species’ intelligence than accuracy would, because it facilitates all these systems of knowledge sharing. Eventually, the knowledge we pool develops nuance as we create strategies to be self-aware and critical of our biological deficits. That is not to disregard memetically spread deficits, which these loose, error-prone abstraction strategies likely amplify.

It’s also important to note that massive systems of people introduce abhorrent incentives, such as deception and manipulation, which work against truth-seeking and the transfer of truths. Truth is valuable, but not infinitely so. Deception and false realities are sometimes valuable too. In that light, memetically spread deficits can become valuable in various ways, though that’s tangential to the topic at hand.

I believe it’s possible that chimpanzees are more intelligent than humans at a baseline, if we strip away the benefits of the knowledge we’ve acquired through massive generational transfer of knowledge wealth. There have been experiments that challenge human and chimpanzee working memory, a critical factor in reasoning. The research found that chimpanzees prevailed in an almost unsettling way. Observe for yourself below.

It’s worth noting that later experiments trained humans to reach a similar level. The earlier studies used untrained humans, which gave the chimps an advantage.

Rather than viewing humans’ success as emerging from raw general intelligence, I view humans as chimp-ants. Ants are eusocial insects that rely on collective intelligence to achieve feats like farming livestock and fungi, slavery, and war. These behaviors emerge from their collective tendencies, not from individual intellectual capacity. Humans could be less intelligent than chimpanzees yet borderline eusocial like ants, allowing generational knowledge wealth to accumulate and create more systems that facilitate our apparent intellectual abilities, rather than relying on basic memory or other rawer intellectual capabilities. It’s almost like we create software tools within our minds that we can transfer to others.

The systems we create to facilitate our intelligent behaviors are likely vestigializing our raw intelligence to some degree. Our technology and knowledge pool might make life easier, which reduces the value of our natural abilities to solve problems or perform in certain ways. Consider that Neanderthals appear to have had larger brains (though it’s complicated), and that domestication seems to shrink animals’ brains. Domestication may partly involve vestigializing many abilities as they are replaced by domestic society and the higher-wellbeing life we’ve curated as a species.

This vestigializing sentiment isn’t new. Many worry that our technology relieves the need for our own thinking, memory, and problem solving to such a degree that it will rot our minds. While rarely regarded as such, language is one of the first of these technologies. Then came books (paper language), Google (digital language), calculators (math robot language), and now ChatGPT (large language models), to name a few. The knowledge pool we’ve created is like a cheat sheet that lets us skip all the work great minds before us did to understand deeper things about reality. Then again, those great minds also relied on a knowledge pool that has been snowballing throughout history.

I believe that our current top AI systems are an extreme manifestation of this knowledge pool. Their oddities, quirky errors, and strange behaviors may be caused by flaws in our own language, much like the errors that emerge from our own excessive reliance on language patterns. That is because language is just a shadow on the wall. Humans, at least, can understand the implied meanings that words don’t communicate. AI, meanwhile, is left in a realm made of empty shadows. Not even 2-D shadows.

The tendency to vestigialize aspects of our cognition, because language can functionally replace or improve them, could help explain why humans experience psychosis (some have argued that schizophrenia is the price we pay for the evolution of language). Along the same lines, this may be why humans are prone to AI-facilitated psychosis once they start trusting the shadow world of our AI language machines.

Another side note: this hypothesis of schizophrenia is offensively oversimplified compared to what I actually think is going on. Still, it may be a factor in how related traits emerge in the gene pool.

Our AI is missing the part of us that is still animal: the part we have failed to translate into words, which has therefore remained necessary and non-vestigial. When we think, there is a lot more going on than words. We even misuse words in ways that transcend their definitional limits. We struggle to form language for some of the deepest truths inside us that have yet to be translated. There are likely fundamental ground patterns that define our base sense of reality but never get overtly communicated, because they are simply assumed and naturally known to be “true”.

I think AGI will need that ground level as a base as well, at least to fully compete with us and rise above us. We need to bring AI out of its cave. But alas, we too are trapped in Plato’s cave. AI progress may eventually transcend what we know, but we may no longer be able to tell whether it is true. Perhaps it will hallucinate a whole theory of reality that goes beyond what we are capable of considering. And yet, it will all seem true to us, just as those in AI psychosis see their reality as true. Or perhaps it will only reach the maximum knowledge state of collective humanity, bounded by our own pitfalls and limits. It could eventually appear omniscient, even if it were built on our limitations.

This is getting quite metaphysical and, dare I say, abstract or loose.

Practical approaches to AGI might involve mining the human mind for the lost information that never became words. Perhaps by scanning brains at the most microscopic levels and feeding that data to AI. Or by diving into our own psyches to pull out the truth locked away deep inside. But even then, we might just create a human GOD that misses the realms of reality we are shut out of, the world beyond our cave. That would still be amazing, though, even if Plato’s cave is infinitely recursive, which is totally a major theme of The Psychonet.

None of this addresses how to imbue AI with feelings or sentience, which are separate and fascinating topics we should explore another time.

Thank you to the 17 patrons who support these projects! Whether you know it or not, you’ve genuinely inspired me and helped get me through some really tough times.

An update: I’m at the end of the formatting stage, and hopefully the printing stage, for the book The Psychonet Apocalyptica. It’s come so much further in the last few years. It’s so much better than the first book that I am not going to print the first one until I rewrite it. Redoing it will be a pain, or maybe fun, but it’s necessary to resolve my embarrassment over the incomplete and rough nature of that project. I’ve been working crazy hard on that while also managing some personal situations.